By Thomas Ultican 1/25/2020

Deformers in California are outraged. Along with Kansas, California is one of only two states that do not use student growth models to measure school performance. In a 2019 brief published by Policy Analysis for California Education (PACE), USC Professor of Education Policy Morgan Polikoff writes,

“Based on the existing literature and an examination of California’s own goals for the Dashboard and the continuous improvement system, the state should adopt a student-level growth model as soon as possible. Forty-eight states have already done so; there is no reason for California to hang back with Kansas while other states use growth data to improve their schools.”

This may sound dire. California is getting left in the dust. After all, everybody else is doing it. However, there is a skunk or two in the woodpile.

PACE and the California Office of Reform Education (CORE) sound like official government organizations, but they are in fact billionaire-created institutions developed to control public school policy. Today, PACE and CORE work closely together on disrupter agendas, such as developing an inexpensive method for holding schools accountable. Growth models based on standardized testing are being promoted in California and nationwide despite the evidence undermining their validity.

In 1982, using a grant from the Hewlett Foundation, Gerald Hayward, Michael Kirst and James Guthrie founded PACE. They originally called it “Policy Alternatives for California Education.” Since then they have replaced the word “Alternatives” with “Analysis.” In 1984, PACE helped the California Department of Education develop a blue-ribbon commission for the teaching profession. When Republican Governor George Deukmejian officially created the commission, PACE had solidified its position of influence within state education circles.

CORE was created in 2010 with two agendas: implementing the Common Core and establishing the infrastructure of Competency-Based Education (CBE). The original financing came from the Stuart Foundation, which provided $700,000 in 2010 and $800,000 in 2011. At about the same time, Phil Halperin, one of the minority owners of the San Francisco Giants, formed California Education Partners, which became the administrative and fundraising arm of CORE.

Halperin also runs the Silver Giving Foundation, a philanthropy he founded with money amassed as a partner in the Weston Presidio private equity firm. His biography on the Stanford Freeman Spogli Institute website (he is an advisory board trustee) says that at Weston, Halperin was “focused on information technology, consumer branding, telecommunications and media, and that he previously worked at Lehman Brothers and Montgomery Securities.”

Originally conceived as an organization for leaders in urban school districts to share strategies, CORE gained notoriety when its eight districts (Los Angeles, Long Beach, San Francisco, Oakland, Santa Ana, Sanger, Sacramento City and Fresno) made a legally questionable side deal with US Secretary of Education Arne Duncan. In 2013, after Duncan denied California’s NCLB waiver request, the CORE districts, led by Broad Academy-trained Los Angeles Superintendent John Deasy, devised an accountability scheme separate from the state’s in order to obtain their own waivers.

CORE and PACE are financed by groups that Diane Ravitch’s new book, Slaying Goliath, labels disrupters.

Information Clipped from the Funders Pages of CORE and PACE

Promoting Education Growth Models

Tultican’s Fundamental Education Growth Model

The book An Incomplete Education tells of Thomas Carlyle calling economics “the dismal science.” Authors Judy Jones and William Wilson assert, “As with much that economists say, this statement is half true: It is dismal.” In previous eras, leaders had oracles to predict the future. Today, they have economists. People like Stanford’s Eric Hanushek (economics PhD from MIT) have outsized influence over education policy compared with professionals who have spent their lives practicing and studying education.

Dr. William Lester Sanders invented the Tennessee Value-Added Assessment System (TVAAS), also known as the Educational Value-Added Assessment System (EVAAS). At the University of Tennessee, Sanders applied statistical tools to animal studies and quantitative genetics. He believed he could apply the same mathematical tools to evaluate schools and teachers. Since the early 1990s, Sanders’s multivariate growth model has been the gold standard among the value-added models (VAMs) used to assess teachers.

However, unlike the scientifically well-behaved data associated with genetics studies, standardized testing data is extremely noisy. The famed Australian researcher Noel Wilson wrote a seminal work in 1998 called “Educational Standards and the Problem of Error.” His peer-reviewed paper, which has never been credibly refuted, argues that error in standardized testing is so large that meaningful inferences are impossible.

Wilson’s paper was followed a year later by a paper from UCLA education professor James Popham, which stated, “Although educators need to produce valid evidence regarding their effectiveness, standardized achievement tests are the wrong tools for the task.”

However, the desire to control public education from state capitals and Washington, DC, required an inexpensive way to evaluate schools and educators. Standardized testing, which had been promoted by the Clinton administration, was written into federal law by George W. Bush’s administration in the No Child Left Behind Act. Every state was required to ignore the professional warnings and institute standardized testing to evaluate schools. States were threatened with the loss of all federal education dollars if they did not comply.

Misguided testing policies forced the closing of many excellent public schools that were serving poor and minority communities. It soon became very clear that the only reliable correlation with school testing data was student poverty. Last year, the famed education scholar Linda Darling-Hammond said in an Ohio presentation,

“There’s about a 0.9 correlation between the level of poverty and test scores. So, if the only thing you measure is the absolute test score, then you’re always going to have the high poverty communities at the bottom and then they can be taken over.”

Now the public is being told that education growth models can control for poverty, language and other factors, and that these models provide a valid analysis of the performance of teachers, schools and districts. However, the noise in standardized testing data still leads to “garbage in, garbage out.”

In 2013, Katherine E. Castellano of the University of California, Berkeley, and Andrew D. Ho of the Harvard Graduate School of Education wrote “A Practitioner’s Guide to Growth Models.” Their paper was commissioned by the Council of Chief State School Officers (major funding from the Bill and Melinda Gates Foundation). In it they identify and describe seven education growth models, which can be reduced to three distinct models: the gain model, the residual-gain model and the multivariate model.

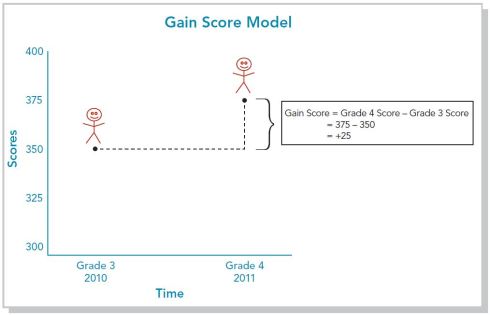

The gain model looks at the gains individual students make from one year to the next by taking the difference between their scores. Residual-gain models predict an expected score for each student, typically from prior scores, and report the difference between the actual and the expected score. The third is the Sanders multivariate model, which uses algorithms to control for student and school effects on test results.
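To make the difference between the first two models concrete, here is a minimal Python sketch using four made-up students. It assumes the scores already sit on a common vertical scale and that the residual-gain prediction comes from a single prior-year score in an ordinary least-squares regression. The function names are hypothetical, and real residual-gain systems, including the one CORE runs, may use more predictors and different estimation methods.

```python
from statistics import mean


def gain_scores(prior, current):
    """Gain model: each student's current score minus their prior score.
    Assumes both years are already on one vertical scale."""
    return [c - p for p, c in zip(prior, current)]


def residual_gains(prior, current):
    """Simplified residual-gain model (an assumption for illustration):
    fit current = a + b * prior by ordinary least squares, then return
    each student's actual score minus the predicted score."""
    mx, my = mean(prior), mean(current)
    sxx = sum((x - mx) ** 2 for x in prior)
    sxy = sum((x - mx) * (y - my) for x, y in zip(prior, current))
    slope = sxy / sxx
    intercept = my - slope * mx
    return [y - (intercept + slope * x) for x, y in zip(prior, current)]


# Hypothetical scale scores for four students (not real data)
prior = [400, 420, 450, 480]
current = [430, 440, 470, 520]

print(gain_scores(prior, current))                                # [30, 20, 20, 40]
print([round(r, 1) for r in residual_gains(prior, current)])      # [7.3, -5.2, -9.1, 7.0]
```

Students 2 and 3 both gain 20 points, yet their residual gains differ (about -5.2 and -9.1) because each is judged against a prediction rather than against their own starting point. Because a least-squares fit with an intercept forces the residuals to sum to zero, the residual-gain model is relative by design: any student above expectation is offset by students below it.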

The simplest model to understand is the gain model.

Graphic from the Practitioner’s Guide illustrating the gain model

The gain model has four problems. First, noisy testing data is fed into it. Second, a vertical score scale must be created using many assumptions: third-grade and fourth-grade scores come from different tests, so a scale linking the two must be built before they can be compared. Third, the model requires a unique identifier for each student tested. Finally, like all growth models, it requires testing every student every year.
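The noise problem is magnified in a gain model, because a gain is the difference of two noisy scores. Under the standard assumption that the measurement errors on the two tests are independent, their variances add. The short sketch below computes the standard error of a gain; the function name and the example standard errors are hypothetical.

```python
import math


def gain_standard_error(se_prior, se_current):
    """Standard error of (current score - prior score), assuming the two
    tests' measurement errors are independent, so their variances add."""
    return math.sqrt(se_prior ** 2 + se_current ** 2)


# Hypothetical standard errors of 3 and 4 scale points on the two tests
print(gain_standard_error(3, 4))    # 5.0
# Equal errors on both tests inflate the error of the gain by about 41%
print(gain_standard_error(10, 10))  # about 14.14
```

Since a one-year gain is far smaller than either score, an error of this size can be large relative to the very quantity the model is trying to measure.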

The gain model is the least mathematically manipulated model and requires the fewest assumptions. The residual-gain model requires significantly more manipulation, and the multivariate model is the most complex, manipulated and opaque of them all. None of the three models has been decisively shown to provide accurate analysis, but strong evidence has emerged that they do not.

Jesse Rothstein is a professor of public policy and economics at the University of California, Berkeley. In 2007 he used data from North Carolina to conduct a falsification test of various value-added models. He ran the models to see what effect fifth-grade teachers had on their students’ fourth-grade learning, an effect that should be zero because a future teacher cannot cause past learning. Shockingly, he discovered, “In particular, these models indicate large ‘effects’ of 5th grade teachers on 4th grade test score gains.” Whatever the three models he tested were measuring, it clearly was not the teacher effect on learning.
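The logic of a falsification test can be sketched in a few lines of Python. This toy version is not Rothstein’s method or data: it simulates fourth-grade gains as pure noise, assumes (for illustration only) that students are then tracked into fifth-grade classrooms by those gains, and computes a naive “effect” for each fifth-grade teacher as the average fourth-grade gain of that teacher’s students. Since the teachers arrive after the gains, any nonzero “effect” is spurious by construction. The function and parameter names are hypothetical.

```python
import random
from statistics import mean


def toy_falsification_test(n_students=200, n_teachers=5, seed=1):
    """Return a naive 'effect' of each 5th-grade teacher on 4th-grade gains.
    The true effect is zero by construction: teachers are assigned after the
    gains happen. Assumption for illustration: classes are formed by sorting
    students on their 4th-grade gains (tracking)."""
    rng = random.Random(seed)
    gains_g4 = [rng.gauss(0, 10) for _ in range(n_students)]

    # Track students: rank by 4th-grade gain, then fill classes in order
    ranked = sorted(range(n_students), key=lambda i: gains_g4[i])
    class_size = n_students // n_teachers
    teacher_of = {s: min(rank // class_size, n_teachers - 1)
                  for rank, s in enumerate(ranked)}

    # Naive value-added: mean prior-year gain of each teacher's students
    return [mean(gains_g4[s] for s in range(n_students) if teacher_of[s] == t)
            for t in range(n_teachers)]


for t, effect in enumerate(toy_falsification_test()):
    print(f"5th-grade teacher {t}: 'effect' on 4th-grade gains = {effect:+.1f}")
```

The “effects” rise steadily from the first teacher to the last purely because of how students were sorted, even though no fifth-grade teacher could have influenced the earlier gains.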

In an article, Linda Darling-Hammond echoes a large number of researchers who note the instability of VAM results. She shared, “A study examining data from five school districts found, for example, that of teachers who scored in the bottom 20% of rankings in one year, only 20% to 30% had similar ratings the next year, while 25% to 45% of these teachers moved to the top part of the distribution, scoring well above average.”
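Why would rankings bounce around this much? A minimal simulation, assuming each teacher’s yearly VAM score equals a fixed true effect plus fresh, independent measurement noise, shows the mechanism. The numbers are synthetic, not the five-district data cited above, and the function name and defaults are hypothetical.

```python
import random


def bottom_quintile_persistence(n_teachers=500, true_sd=1.0, noise_sd=1.0, seed=0):
    """Share of teachers ranked in the bottom 20% in year 1 who are also in the
    bottom 20% in year 2. Assumes score = fixed true effect + new noise each year."""
    rng = random.Random(seed)
    true_effect = [rng.gauss(0, true_sd) for _ in range(n_teachers)]
    year1 = [t + rng.gauss(0, noise_sd) for t in true_effect]
    year2 = [t + rng.gauss(0, noise_sd) for t in true_effect]

    k = n_teachers // 5  # size of the bottom quintile
    bottom1 = set(sorted(range(n_teachers), key=lambda i: year1[i])[:k])
    bottom2 = set(sorted(range(n_teachers), key=lambda i: year2[i])[:k])
    return len(bottom1 & bottom2) / k


print(bottom_quintile_persistence(noise_sd=0.0))  # 1.0: no noise, no reshuffling
print(bottom_quintile_persistence(noise_sd=1.0))
```

With no noise every bottom-ranked teacher stays put. Once the noise is as large as the true differences between teachers, the persistence rate should drop well below 1.0, even though no teacher’s actual effectiveness changed between the two years.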

In 2014, the American Statistical Association weighed in on using VAMs to analyze educators and schools,

“The VAM scores themselves have large standard errors, even when calculated using several years of data. These large standard errors make rankings unstable, even under the best scenarios for modeling.”
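What a large standard error does to a ranking can be shown with two hypothetical teachers whose VAM estimates, in student standard-deviation units, are 0.10 and -0.05. The sketch assumes a normal approximation (estimate plus or minus 1.96 standard errors for a 95% interval); the ASA statement does not prescribe this calculation, and the numbers and function names are illustrative. Overlapping intervals are only a rough, conservative check, not a formal test of the difference.

```python
def ci95(estimate, se):
    """Approximate 95% confidence interval under a normal approximation."""
    return (estimate - 1.96 * se, estimate + 1.96 * se)


def intervals_overlap(a, b):
    """True if two (low, high) intervals share any values."""
    return a[0] <= b[1] and b[0] <= a[1]


# Hypothetical teachers: A looks better than B on the point estimate alone
for se in (0.10, 0.01):
    a, b = ci95(0.10, se), ci95(-0.05, se)
    print(f"SE={se}: A={a[0]:+.3f}..{a[1]:+.3f}, B={b[0]:+.3f}..{b[1]:+.3f}, "
          f"overlap={intervals_overlap(a, b)}")
```

With a standard error of 0.10 the intervals overlap, so the data cannot reliably say which teacher is better; only with a much smaller standard error (0.01 here) do they separate.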

Last November, another falsification study was run. Marianne Bitler (Department of Economics, University of California, Davis), Thurston Domina (School of Education, UNC Chapel Hill), Sean Corcoran (Vanderbilt University) and Emily Penner (University of California, Irvine) collaborated on the paper “Teacher Effects on Student Achievement and Height: A Cautionary Tale.” The paper, which is still undergoing peer review, used New York City student data to run a VAM study of teacher effects on students’ growth in height. They found, “Using a common measure of effect size in standard deviation units, we find a 1σ increase in ‘value-added’ on the height of New York City 4th graders is about 0.22σ, or 0.65 inches.” This effect was statistically significant under permutation testing, and the height effects measured were in the same range as the effects measured for math and English testing.
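A permutation test asks how often a statistic at least as large as the observed one would appear if students were shuffled among classrooms at random. The sketch below is a generic version of that idea, not the procedure the height study used: it takes the variance of classroom means as the test statistic (an assumption chosen for simplicity), and the function names and toy heights are hypothetical.

```python
import random
from statistics import mean, pvariance


def between_class_variance(values, labels):
    """Variance of the classroom means: large when classrooms differ."""
    groups = {}
    for v, g in zip(values, labels):
        groups.setdefault(g, []).append(v)
    return pvariance([mean(vs) for vs in groups.values()])


def permutation_p_value(values, labels, n_perm=999, seed=0):
    """Permutation p-value: (1 + number of random reshufflings of students
    across classrooms whose between-class variance is at least the observed
    one) / (1 + n_perm)."""
    rng = random.Random(seed)
    observed = between_class_variance(values, labels)
    shuffled = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break any link between students and classrooms
        if between_class_variance(values, shuffled) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # count the observed arrangement itself


# Hypothetical heights (inches) for two classrooms of ten students each
heights = [50] * 10 + [60] * 10
rooms = ["A"] * 10 + ["B"] * 10
print(permutation_p_value(heights, rooms))
```

Here the two classrooms differ sharply, so the p-value is tiny. A small p-value only says the classroom differences are unlikely to be chance; it says nothing about whether the teacher caused them, which is the trap the height study exposes.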

What is being measured? Certainly teachers do not have an effect on student growth in height.

Why are PACE and CORE Advocating Education Growth Models?

CORE is offering to calculate growth measures for California school districts using the residual-gain model. Austin Beutner, the banker with no professional background in education who is now superintendent of the nation’s second-largest school district, has taken CORE up on its offer. He says it is a better way to judge a school’s impact on student learning.

Now multiple years of student data and personal information are being shipped off to CORE. What could go wrong?

CORE Executive Director Rick Miller promises, “Through our partnership with Policy Analysis for California Education (PACE), we will also continue to share our quantitative and qualitative findings with state and federal decision makers to inform policy.”

The answer to why these billionaire-funded organizations are leading the push for growth models in California is money and power. With student-identified data and a requirement for multiple consecutive years of testing, there is big money to be made. And pro-school-choice organizations like GO Public Schools in Oakland like it because the model can then be used to justify privatizing more public schools. It is the No Child Left Behind test-and-punish scheme hidden behind obscure economic algorithms. However, it is more destructive and more misguided.

Twitter: @tultican

9 Responses to “Selling Education Growth Models”